In brief: Microsoft will reveal its quarterly financial results on Wednesday, and it might not be a high point for the Redmond giant. Analysts expect Microsoft to report its slowest quarterly revenue growth in a year, and once again there are concerns about how much the company is investing in AI when demand and returns don't yet justify the outlay.

Microsoft has invested around $13 billion in ChatGPT-maker OpenAI since 2019, but we've been hearing reports since April that investors are concerned that reaping the financial rewards is taking longer than expected.

Reuters reports that Morgan Stanley analysts say there is a "wall of worry" around Microsoft's earnings due to "ramping capital expenditures, margin compression, lack of evidence on AI returns, and messiness post a financial resegmentation."

One of the biggest AI-related disappointments for Microsoft is Copilot. The company has been pushing its AI tools incredibly hard, going as far as adding a dedicated Copilot key to its latest laptops, but most people are apathetic toward the assistant, with the most common complaint reportedly being that it's not as good as ChatGPT.

In September, Salesforce CEO Marc Benioff said Copilot is basically the new Microsoft Clippy, and that customers had not gotten value from it.

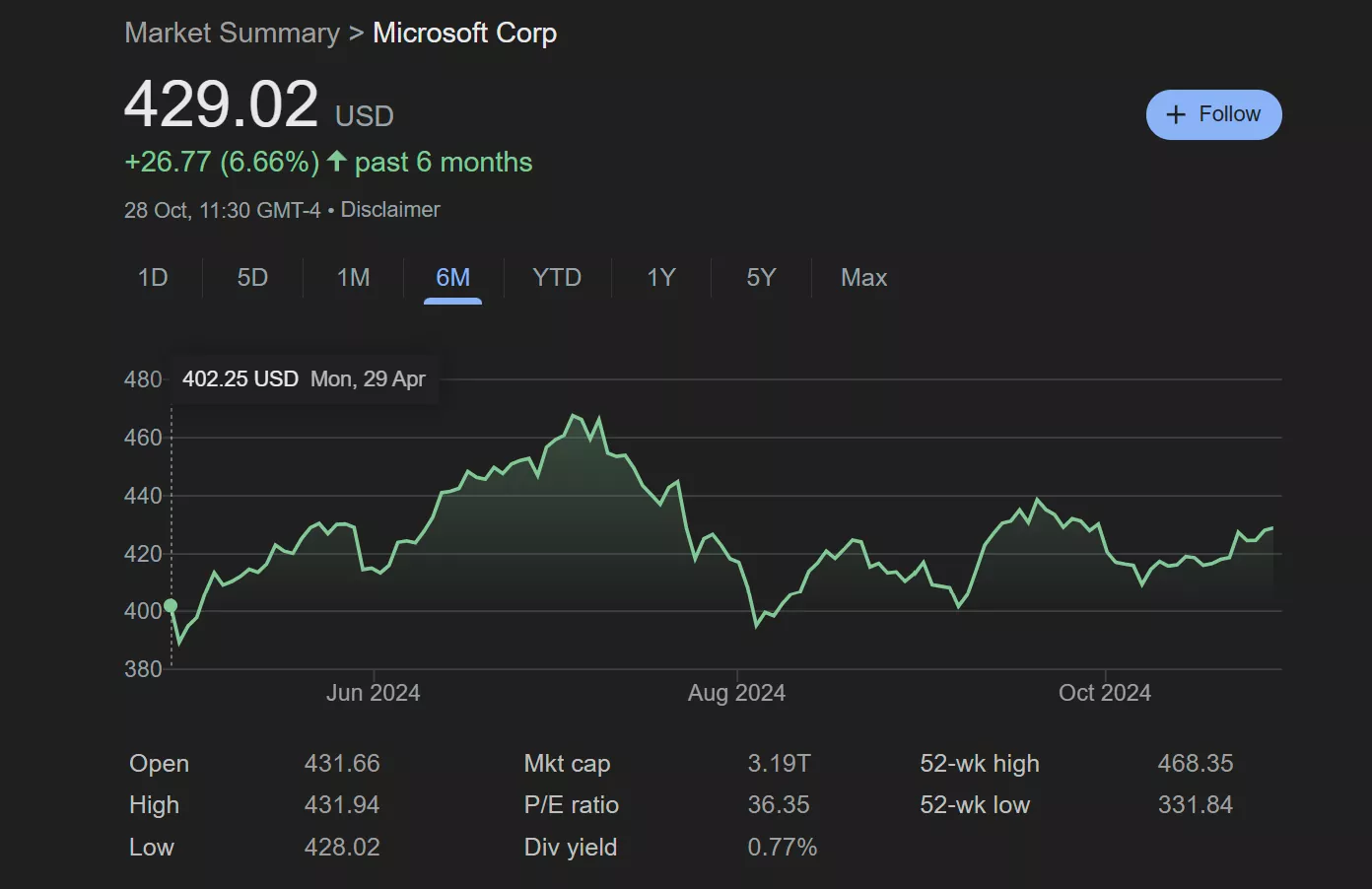

Microsoft's stock price over the last six months

A survey of 152 information technology companies carried out by research firm Gartner in August found that the majority of them had not progressed their Copilot initiatives past the pilot stage.

Microsoft could boost Copilot uptake through an enterprise tool it unveiled last week. Microsoft Copilot Studio lets clients build AI agents that can automate internal tasks, fulfilling the sorts of administrative roles usually performed by employees. That's obviously brought a lot of criticism about AI replacing human workers, though Microsoft claims it will automate tedious tasks, freeing employees to focus on other, more important things, like looking for a job before they're replaced, probably.

Microsoft's stock price is up 14% this year, but it has risen only around 1% since late July, underperforming the benchmark S&P 500. The Azure cloud-computing unit likely grew by 33% in the fiscal first quarter, in line with the company's own forecast, but that would still be slower growth than in the fourth quarter.

Microsoft's total revenue is expected to have risen 14.1% to $64.51 billion. The company has said that its spending on AI technology will remain high.

It was reported last week that Microsoft CEO Satya Nadella's pay increased by 63% compared to last year, even though he had asked for the amount he receives to be reduced. While Nadella's salary was cut by 50%, other forms of his compensation increased significantly.

Microsoft faces scrutiny over AI spending as Copilot adoption lags